Google Product Search vs Vertex AI multimodal embeddings

Google offers a managed service called Product Search for finding similar products from an image. It is a neat service that lets you do product search without manually embedding images, setting up a vector database, or preprocessing images. The pricing is predictable: 1 USD per 10,000 images stored per month and 4.50 USD per 1,000 image queries. Google has not updated the service much, and it has no graphical interface in the Cloud Console, which makes it a bit hard to use. Together with the rest of the first generation of Google Cloud AI services, it seems to be neglected in favor of the newer Vertex AI.

In this article I compare the results of the managed Product Search service with those of a do-it-yourself approach: embedding the images with Vertex AI's multimodal embedding model and running a nearest-neighbor search over the embeddings. The two solutions are referred to below as Product Search and Vertex.

Methodology

I collected target images of 380 products from one store, all categorized as "Eggs and dairy". These form the search space. I then found 13 test images of products from other stores or offer catalogs, all of which I judged to belong to the "Eggs and dairy" category.

For Product Search, the target images were indexed in the "general" product category with no extra tags. The test images were then queried against the resulting product collection.

For Vertex, the images were sent to the multimodalembedding@001 embedding endpoint with only the image and no text. The test images were then matched against the target embeddings with scikit-learn NearestNeighbors (k=3) using cosine similarity.
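To make the query step concrete, here is a minimal sketch of a top-3 cosine-similarity lookup. It is not the code used for the test: it is a dependency-free, brute-force stand-in for what `NearestNeighbors(n_neighbors=3, metric="cosine")` computes (scikit-learn reports cosine distance, which is 1 minus the similarity). The product ids and the toy 3-dimensional vectors are made up for illustration; real embeddings from the endpoint are 1408-dimensional.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query, targets, k=3):
    """Return the k (product_id, similarity) pairs most similar to query.

    targets maps a product id to its embedding vector.
    """
    scored = [(pid, cosine_similarity(query, vec)) for pid, vec in targets.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical search space: toy vectors standing in for image embeddings.
targets = {
    "milk": [1.0, 0.0, 0.0],
    "butter": [0.0, 1.0, 0.0],
    "yoghurt": [0.7, 0.7, 0.0],
    "eggs": [0.0, 0.0, 1.0],
}

# Hypothetical test-image embedding.
matches = top_k([0.9, 0.1, 0.0], targets, k=3)
for pid, score in matches:
    print(f"{pid}: {score:.2f}")
```

With 380 target images a brute-force scan like this is more than fast enough; an index structure only starts to matter for much larger catalogs.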

Results

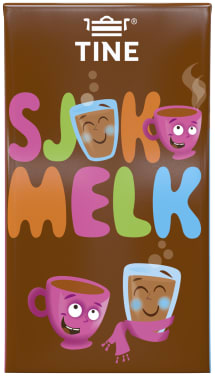

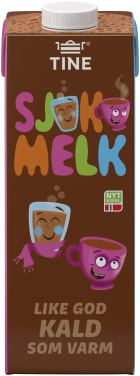

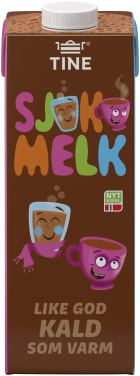

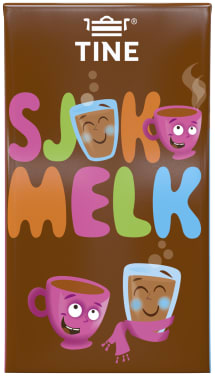

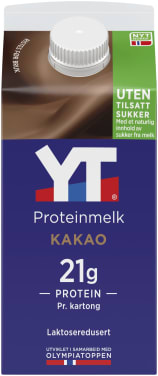

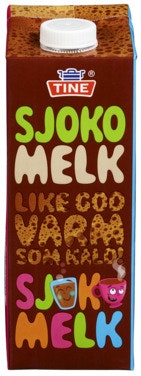

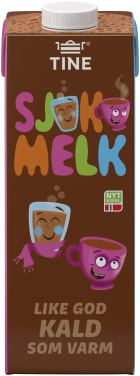

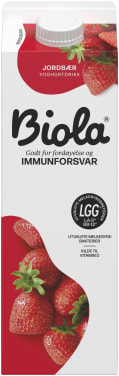

Test image

Results Product Search

Score: 0.53

Score: 0.53 Score: 0.52

Score: 0.52 Score: 0.51

Score: 0.51Results Vertex multimodal embedding

Score: 0.75

Score: 0.75 Score: 0.74

Score: 0.74 Score: 0.73

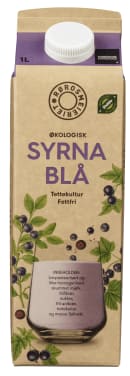

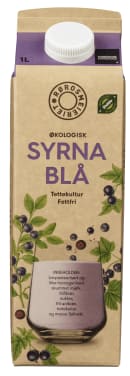

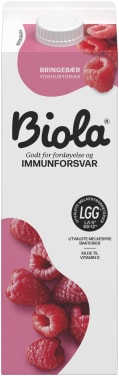

Score: 0.73Test image

Results Product Search

Score: 0.46

Score: 0.46 Score: 0.46

Score: 0.46 Score: 0.34

Score: 0.34Results Vertex multimodal embedding

Score: 0.73

Score: 0.73 Score: 0.68

Score: 0.68 Score: 0.65

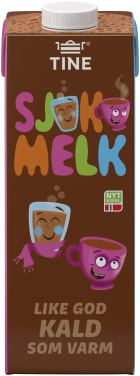

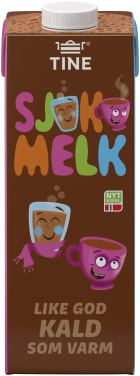

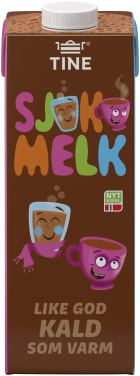

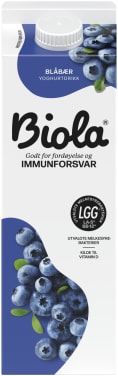

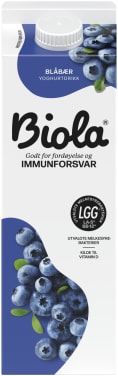

Score: 0.65Test image

Results Product Search

Score: 0.47

Score: 0.47 Score: 0.46

Score: 0.46 Score: 0.45

Score: 0.45Results Vertex multimodal embedding

Score: 0.60

Score: 0.60 Score: 0.56

Score: 0.56 Score: 0.55

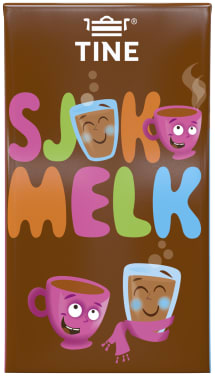

Score: 0.55Test image

Results Product Search

Score: 0.39

Score: 0.39 Score: 0.38

Score: 0.38 Score: 0.36

Score: 0.36Results Vertex multimodal embedding

Score: 0.69

Score: 0.69 Score: 0.67

Score: 0.67 Score: 0.65

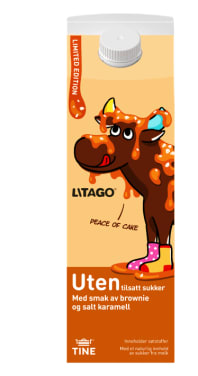

Score: 0.65Test image

Results Product Search

Score: 0.66

Score: 0.66 Score: 0.66

Score: 0.66 Score: 0.55

Score: 0.55Results Vertex multimodal embedding

Score: 0.57

Score: 0.57 Score: 0.57

Score: 0.57 Score: 0.56

Score: 0.56Test image

Results Product Search

Score: 0.30

Score: 0.30 Score: 0.30

Score: 0.30 Score: 0.30

Score: 0.30Results Vertex multimodal embedding

Score: 0.51

Score: 0.51 Score: 0.46

Score: 0.46 Score: 0.46

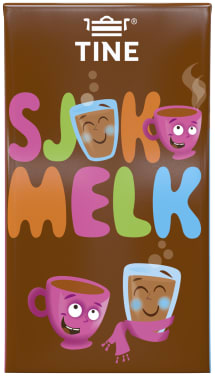

Score: 0.46Test image

Results Product Search

Score: 0.45

Score: 0.45 Score: 0.42

Score: 0.42 Score: 0.42

Score: 0.42Results Vertex multimodal embedding

Score: 0.60

Score: 0.60 Score: 0.57

Score: 0.57 Score: 0.57

Score: 0.57Test image

Results Product Search

Score: 0.80

Score: 0.80 Score: 0.44

Score: 0.44 Score: 0.37

Score: 0.37Results Vertex multimodal embedding

Score: 0.88

Score: 0.88 Score: 0.87

Score: 0.87 Score: 0.77

Score: 0.77Test image

Results Product Search

Score: 0.90

Score: 0.90 Score: 0.88

Score: 0.88 Score: 0.85

Score: 0.85Results Vertex multimodal embedding

Score: 0.95

Score: 0.95 Score: 0.91

Score: 0.91 Score: 0.87

Score: 0.87Test image

Results Product Search

Score: 0.47

Score: 0.47 Score: 0.47

Score: 0.47 Score: 0.39

Score: 0.39Results Vertex multimodal embedding

Score: 0.90

Score: 0.90 Score: 0.86

Score: 0.86 Score: 0.78

Score: 0.78Test image

Results Product Search

Score: 0.85

Score: 0.85 Score: 0.46

Score: 0.46 Score: 0.44

Score: 0.44Results Vertex multimodal embedding

Score: 0.93

Score: 0.93 Score: 0.66

Score: 0.66 Score: 0.65

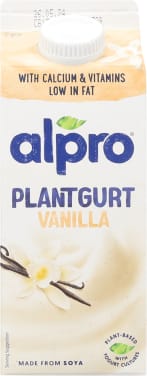

Score: 0.65Test image

Results Product Search

Score: 0.52

Score: 0.52 Score: 0.48

Score: 0.48 Score: 0.46

Score: 0.46Results Vertex multimodal embedding

Score: 0.60

Score: 0.60 Score: 0.59

Score: 0.59 Score: 0.58

Score: 0.58Test image

Results Product Search

Score: 0.41

Score: 0.41 Score: 0.38

Score: 0.38 Score: 0.37

Score: 0.37Results Vertex multimodal embedding

Score: 0.61

Score: 0.61 Score: 0.61

Score: 0.61 Score: 0.60

Score: 0.60Discussion

Both solutions get most of the images right. Where both find the correct products, they sometimes rank them in a slightly different order.

For "Tine Lettmelk med smak" and "Biola skogsbær/vanilje", only Product Search found a good match. These images came from offer catalogs and had text in the foreground, so the Vertex embedding probably captured the overlaid text and layout as well as the product itself.

For "Philadelphia", there was no good match in the search space, so both solutions returned other products, with lower scores than for test images that did have a good match. Both ranked butter or margarine highest.
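Because queries without a good match tend to score lower, one simple way to act on this is a score cutoff below which a result is treated as "no match". The sketch below only illustrates that idea. The product ids are hypothetical, the score patterns are of the kind seen in the results above, and the cutoff of 0.7 is an assumption that has not been tuned. Scores from Product Search and from Vertex are on different scales, so each system would need its own cutoff.

```python
def confident_matches(results, min_score):
    """Keep only the (product_id, score) pairs whose score reaches min_score."""
    return [(pid, score) for pid, score in results if score >= min_score]


# Hypothetical ids; one clear top hit followed by much weaker candidates.
clear_hit = [("product-a", 0.80), ("product-b", 0.44), ("product-c", 0.37)]

# Hypothetical ids; uniformly low scores, as when nothing similar is indexed.
no_hit = [("product-d", 0.30), ("product-e", 0.30), ("product-f", 0.30)]

ASSUMED_CUTOFF = 0.7  # illustrative value only, not derived from the test

print(confident_matches(clear_hit, ASSUMED_CUTOFF))
print(confident_matches(no_hit, ASSUMED_CUTOFF))
```

A fixed cutoff is crude. With only 13 test images there is not enough data here to choose one reliably, so in practice it would have to be calibrated on a larger labeled set.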

For "Smøremyk", which had no visually close match in the search space, both solutions returned products of the same kind, i.e. butter-imitation spreads.

Conclusion

In this small test, Product Search seems to offer a better solution than using only multimodal embeddings and KNN queries. Perhaps Product Search does something in its pipeline to identify objects and extract the most relevant part of an image. For the Vertex solution to be equally good, we might have to preprocess the images to isolate the product before embedding them.

On pricing and management

Product Search is a ready-to-use service, in which experts at Google have built a good solution for the specific purpose of recognizing similar products from images. One only has to upload items for it to work. Using Vertex involves more steps and is, as demonstrated, not as good unless the processing pipeline is optimized.

For indexing images once, the price is the same for Vertex and Product Search: 1 USD per 10,000 images. Product Search charges this monthly, while with Vertex the embeddings are paid for once and can then be stored much more cheaply. For querying, Product Search charges 4.50 USD per 1,000 queries, while for Vertex the cost depends on how the querying is done. If the goal is to find similar products once, this can be done in a Python workbench for a negligible cost. For continuous querying, the cost of running a database capable of KNN search depends on many factors. One could perhaps assume that the cost of running a vector database such as Vertex AI Vector Search is similar to the cost of using Product Search.
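The arithmetic can be sketched as follows, using only the prices quoted in this article. The function names and the example volumes are made up for illustration. The Vertex figure assumes that query images are embedded at the same per-image rate as target images, and it leaves out the cost of storing and searching the embeddings, which is the part that is hard to estimate.

```python
# Prices quoted in the text above (USD).
STORAGE_USD_PER_10K_IMAGES_PER_MONTH = 1.00  # Product Search image storage
QUERY_USD_PER_1K = 4.50                      # Product Search image queries
EMBED_USD_PER_10K_IMAGES = 1.00              # Vertex embedding, paid once per image


def product_search_cost(n_images, months, n_queries):
    """Total Product Search cost: monthly storage plus per-query charges."""
    storage = n_images / 10_000 * STORAGE_USD_PER_10K_IMAGES_PER_MONTH * months
    queries = n_queries / 1_000 * QUERY_USD_PER_1K
    return storage + queries


def vertex_embedding_cost(n_images, n_queries):
    """Vertex embedding cost only; vector storage and search are NOT included.

    Assumes each query image is embedded at the same rate as a target image.
    """
    return (n_images + n_queries) / 10_000 * EMBED_USD_PER_10K_IMAGES


# Hypothetical workload: 100,000 products, one year, 50,000 image queries.
print(product_search_cost(100_000, 12, 50_000))  # 120 storage + 225 queries
print(vertex_embedding_cost(100_000, 50_000))    # embedding calls only
```

For this made-up workload, Product Search comes to 345 USD for the year and the Vertex embedding calls to 15 USD. The difference is the budget left for running whatever vector database serves the queries.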

Product Search can store metadata with products, which makes it possible to filter search results. With Vertex, one can of course filter and sort freely using any database system.

Vertex multimodal embedding can also embed English text strings in the same 1408-dimensional space as images, which makes it possible to search your images using only text. This is not possible with Product Search.